Final project: Multi resolution reconstruction with structured light

Introduction

The goal of this project is to obtain a multi resolution reconstruction using a projector and multiple cameras. Two questions need to be addressed when doing the multi resolution reconstruction: (1) how to refine the locations of the 3D points using the information from multiple cameras, and (2) how to handle differing occlusions, i.e. points visible in some cameras but not in the others.

In the proposed approach, both issues are addressed by reducing the problem to a 1D peak detection problem.

1 Setup

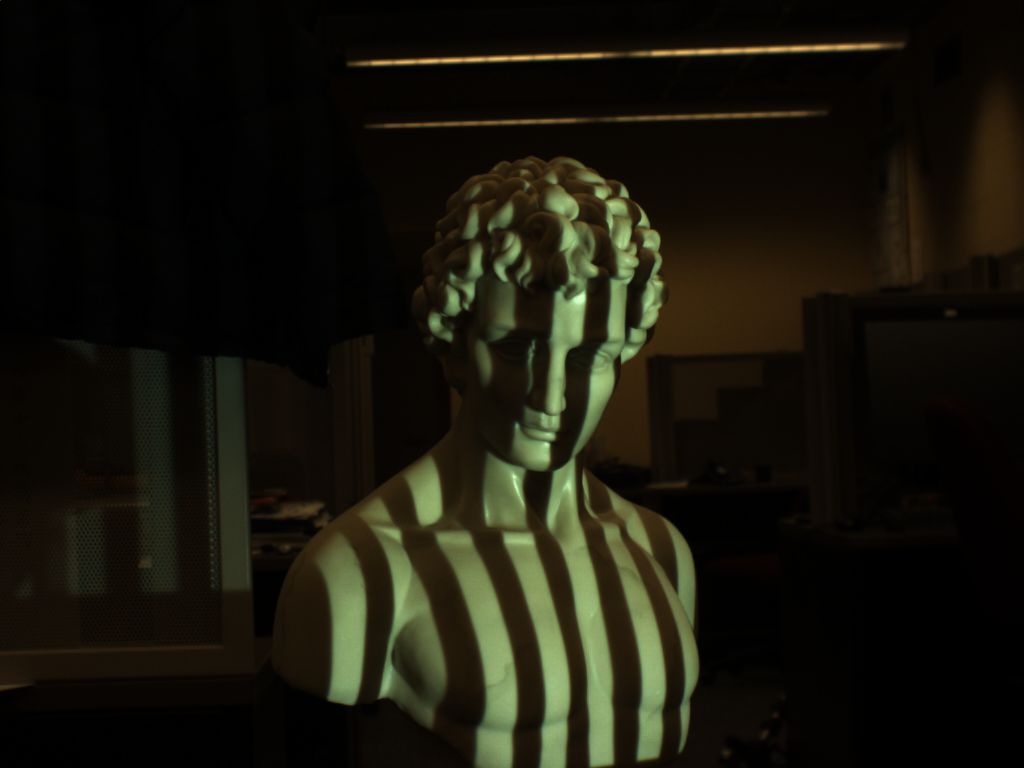

The setup used for this project consists of a projector projecting a Gray code pattern onto an object and two cameras looking at the object. Each camera has a resolution of 1024 x 768. To decode the Gray code pattern at each point on the image plane, 10 images forming a Gray code sequence are projected onto the object in succession and captured by both cameras. The following figures show the images captured by both cameras when the Gray code stripes are projected onto different objects.

Figure 1: Gray code sequence projected onto 'man' and 'frog' objects.

By decoding the Gray code sequence at each pixel location, it is possible to determine which column of the projector plane corresponds to the pixel of interest in the image plane. This information can then be used to recover the pixels in the image plane that correspond to a given projector column.
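The decoding step can be illustrated with a minimal sketch. It assumes that each of the 10 captured images has already been thresholded to one bit per pixel and that the first image carries the most significant bit; neither detail is stated in this report. The function names are hypothetical.

```python
def gray_to_binary(gray):
    """Convert a Gray-coded integer to its plain binary value."""
    binary = gray
    shift = gray >> 1
    while shift:
        binary ^= shift
        shift >>= 1
    return binary


def decode_pixel(bits):
    """Decode one pixel's bit sequence into a projector column index.

    `bits` holds one thresholded value per captured image,
    most significant bit first (an assumption of this sketch).
    """
    gray = 0
    for bit in bits:
        gray = (gray << 1) | (1 if bit else 0)
    return gray_to_binary(gray)


def binary_to_gray(value):
    """Inverse mapping, used here only to build example input."""
    return value ^ (value >> 1)
```

With 10 bits per pixel, up to 2^10 = 1024 projector columns can be distinguished. Grouping pixels by their decoded index then gives the set of image pixels that belong to a given projector column.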

2 Approach

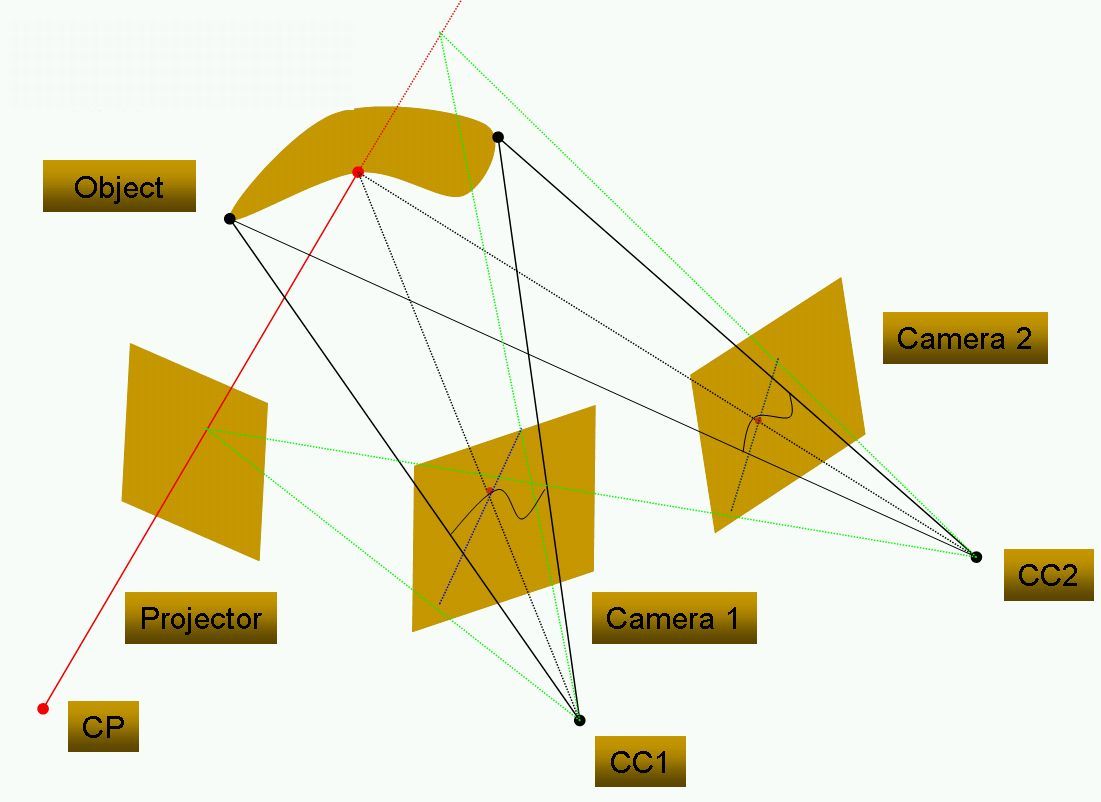

The projector plane is uniformly sampled along its columns, and the ray leaving each point of a given column is in turn sampled along its length. These samples are projected onto the image plane of every camera, where they fall along the epipolar line of the corresponding projector ray. The plane of light from a given projector column falling on the object is seen as a curve whose shape differs from camera to camera. The projected samples therefore intersect these curves at different points, or do not intersect them at all if the point is occluded in that camera.
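The sampling and projection step might look like the following sketch. It assumes an ideal pinhole camera without lens distortion, with intrinsic matrix `K` and pose `R`, `t` mapping points from the projector's frame into the camera's frame. The function names and the depth range `t_near`, `t_far` are hypothetical, not taken from the project.

```python
def sample_ray(origin, direction, t_near, t_far, n):
    """Return n points origin + t * direction, t evenly spaced in [t_near, t_far].

    Requires n >= 2.
    """
    step = (t_far - t_near) / (n - 1)
    return [
        tuple(o + (t_near + i * step) * d for o, d in zip(origin, direction))
        for i in range(n)
    ]


def project(point, K, R, t):
    """Pinhole projection of a 3D point into a camera.

    Returns pixel coordinates (u, v), or None if the point lies
    behind the camera.
    """
    # Transform the point into the camera frame: Xc = R * X + t.
    xc = [sum(R[r][c] * point[c] for c in range(3)) + t[r] for r in range(3)]
    if xc[2] <= 0:
        return None
    x, y = xc[0] / xc[2], xc[1] / xc[2]
    u = K[0][0] * x + K[0][1] * y + K[0][2]
    v = K[1][1] * y + K[1][2]
    return (u, v)
```

Because all samples lie on one 3D line, their projections lie on one line in each image, which is the epipolar line of that projector ray.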

Once the samples along the epipolar lines are obtained, the image intensities are interpolated at these sample locations. Finally, the intensities from all cameras are averaged at each of the original samples drawn from the projector ray, and a peak is detected among these samples, which gives the 3D location of the point.
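A minimal sketch of the interpolation, averaging and peak detection follows. Several details are assumptions rather than facts from this report: bilinear interpolation is used, samples that are occluded or fall outside an image are marked `None` and skipped in the average, and the peak is refined to a fractional index with a three-point parabola fit. All function names are hypothetical.

```python
import math


def bilinear(image, u, v):
    """Interpolate `image` (a list of rows) at column u, row v.

    Returns None when (u, v) falls outside the image.
    The image must be at least 2 x 2 pixels.
    """
    h, w = len(image), len(image[0])
    if not (0 <= u <= w - 1 and 0 <= v <= h - 1):
        return None
    x0 = min(int(math.floor(u)), w - 2)
    y0 = min(int(math.floor(v)), h - 2)
    fx, fy = u - x0, v - y0
    top = image[y0][x0] * (1 - fx) + image[y0][x0 + 1] * fx
    bottom = image[y0 + 1][x0] * (1 - fx) + image[y0 + 1][x0 + 1] * fx
    return top * (1 - fy) + bottom * fy


def average_profiles(profiles):
    """Average per-camera profiles sample by sample, skipping None entries."""
    averaged = []
    for values in zip(*profiles):
        seen = [v for v in values if v is not None]
        averaged.append(sum(seen) / len(seen) if seen else None)
    return averaged


def detect_peak(profile):
    """Return the fractional index of the maximum of a 1D profile, or None."""
    valid = [(v, i) for i, v in enumerate(profile) if v is not None]
    if not valid:
        return None
    _, i = max(valid)
    # Refine with a parabola through the peak and its two neighbours.
    if 0 < i < len(profile) - 1:
        a, b, c = profile[i - 1], profile[i], profile[i + 1]
        if a is not None and c is not None:
            denom = a - 2 * b + c
            if denom < 0:
                return i + 0.5 * (a - c) / denom
    return float(i)
```

The fractional index returned by `detect_peak` maps back to a depth along the projector ray, since the samples are evenly spaced along it. Skipping `None` entries lets a point that is visible in only one camera still contribute a peak.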

Figure 2 shows the process of refining the point of contact for a ray of light emanating from the projector center.

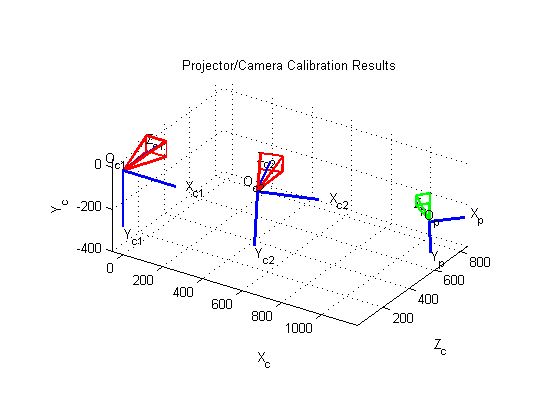

3 Calibration of the setup

The calibration of the whole setup was done in two steps. In the first step, the intrinsic parameters of each camera were determined using a checkerboard pattern and the MATLAB calibration toolbox provided by Jean Bouguet. The extrinsic parameters of each camera with respect to the checkerboard pattern were also stored. In the second step, the now-known 3D location of the checkerboard pattern with respect to the cameras is matched to the 2D points of the checkerboard image that the projector is projecting. Using standard non-linear minimization procedures, the intrinsic parameters of the projector are obtained from these 2D-3D correspondences.
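The report does not state which cost function the minimization uses. A common choice, assumed here purely for illustration, is the RMS reprojection error under a pinhole model without distortion. The sketch below further simplifies by assuming the 3D points are already expressed in the projector's frame; the function name and parameters are hypothetical.

```python
import math


def reprojection_rms(points_3d, points_2d, fx, fy, cx, cy):
    """RMS pixel distance between observed 2D points and projected 3D points.

    points_3d: (X, Y, Z) tuples in the projector frame (assumed known here).
    points_2d: matching (u, v) positions in the projected pattern.
    fx, fy, cx, cy: candidate focal lengths and principal point in pixels.
    """
    total = 0.0
    for (X, Y, Z), (u, v) in zip(points_3d, points_2d):
        du = fx * X / Z + cx - u
        dv = fy * Y / Z + cy - v
        total += du * du + dv * dv
    return math.sqrt(total / len(points_3d))
```

A non-linear optimizer would adjust `fx`, `fy`, `cx` and `cy` (and, in a full calibration, the projector pose) to drive this value down.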

Figure 3 shows the calibration results,where the projector,camera 1 and camera 2 are all plotted in the reference frame of camera 1.

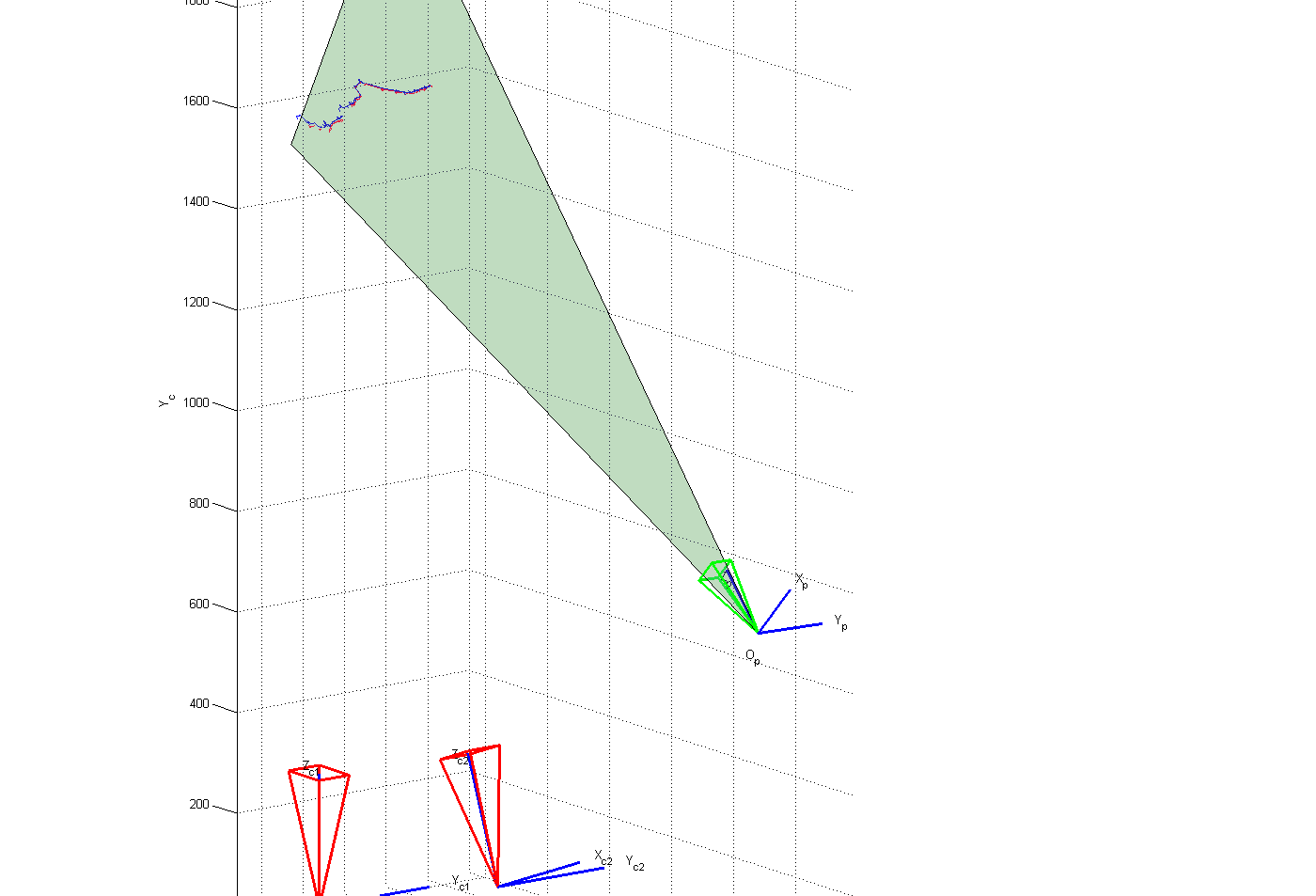

Once the system is calibrated and each pixel in the image plane is decoded, we are ready to take samples along each projector ray and carry out the process described in section 2.

The following figure shows the 'peaks' detected along a column of the projector for the 'man' object. The curves in red and blue correspond to camera 1 and camera 2 respectively.

4 Results

Reconstructions obtained for 'man' and 'frog' using the multi resolution approach are shown below.

Figure 4: Reconstruction results for 'man'.

Figure 5: Reconstruction results for 'frog'.

As a small evaluation, a planar object was scanned using the setup, and a plane was fit to the points reconstructed with the multi resolution approach. The resulting RMS error was compared to the error obtained when fitting a plane to points reconstructed with just one camera. The RMS error was found to be lower for the multi resolution reconstruction.
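An evaluation of this kind could be computed along the following lines. This is a sketch under stated assumptions, not the procedure actually used: the plane is modelled as z = a*x + b*y + c (so it must not be parallel to the z axis), it is fit by ordinary least squares on z, and the reported error is the RMS perpendicular distance to that plane. The function names are hypothetical.

```python
import math


def det3(m):
    """Determinant of a 3 x 3 matrix given as a list of rows."""
    return (
        m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
        - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
        + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0])
    )


def fit_plane(points):
    """Least-squares fit of z = a*x + b*y + c; returns (a, b, c)."""
    n = len(points)
    sx = sum(x for x, y, z in points)
    sy = sum(y for x, y, z in points)
    sz = sum(z for x, y, z in points)
    sxx = sum(x * x for x, y, z in points)
    sxy = sum(x * y for x, y, z in points)
    syy = sum(y * y for x, y, z in points)
    sxz = sum(x * z for x, y, z in points)
    syz = sum(y * z for x, y, z in points)
    # Normal equations A * (a, b, c) = rhs, solved with Cramer's rule.
    A = [[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, n]]
    rhs = [sxz, syz, sz]
    d = det3(A)
    if abs(d) < 1e-12:
        raise ValueError("points do not determine a plane of this form")
    solution = []
    for col in range(3):
        m = [row[:] for row in A]
        for r in range(3):
            m[r][col] = rhs[r]
        solution.append(det3(m) / d)
    return tuple(solution)


def plane_rms(points):
    """RMS perpendicular distance of the points to their fitted plane."""
    a, b, c = fit_plane(points)
    norm = math.sqrt(a * a + b * b + 1.0)
    total = sum(((a * x + b * y + c - z) / norm) ** 2 for x, y, z in points)
    return math.sqrt(total / len(points))
```

Calling `plane_rms` once on the single-camera point cloud and once on the multi-camera point cloud gives the two numbers being compared.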

Results: frog, man